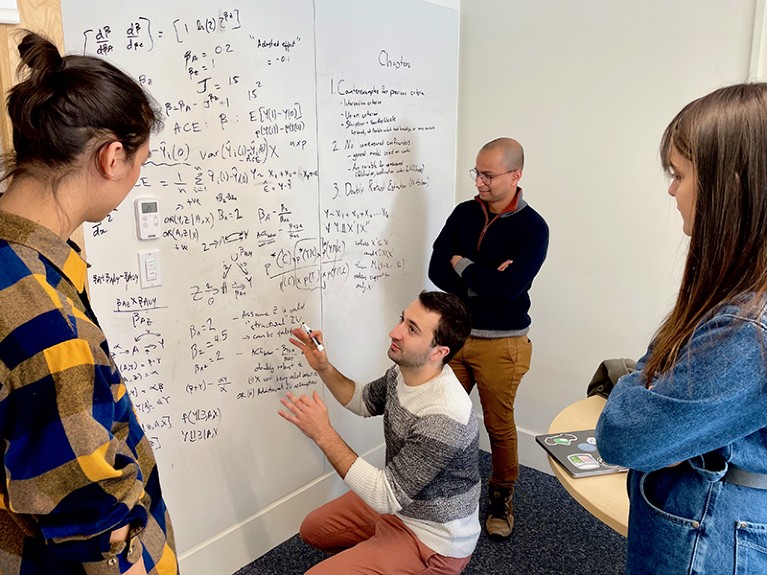

When Rohit Bhattacharya began his PhD in computer science, his aim was to build a tool that could help physicians to identify people with cancer who would respond well to immunotherapy. This form of treatment helps the body’s immune system to fight tumours, and works best against malignant growths that produce proteins that immune cells can bind to. Bhattacharya’s idea was to create neural networks that could profile the genetics of both the tumour and a person’s immune system, and then predict which people would be likely to benefit from treatment.

But he discovered that his algorithms weren’t up to the task. He could identify patterns of genes that correlated with immune response, but that wasn’t sufficient1. “I couldn’t say that this specific pattern of binding, or this specific expression of genes, is a causal determinant in the patient’s response to immunotherapy,” he explains.

Part of Nature Outlook: Robotics and artificial intelligence

Bhattacharya was stymied by the age-old dictum that correlation does not equal causation — a fundamental stumbling block in artificial intelligence (AI). Computers can be trained to spot patterns in data, even patterns that are so subtle that humans might miss them. And computers can use those patterns to make predictions — for instance, that a spot on a lung X-ray indicates a tumour2. But when it comes to cause and effect, machines are typically at a loss. They lack the common-sense understanding of how the world works that people acquire simply by living in it. AI programs trained to spot disease in a lung X-ray, for example, have sometimes gone astray by zeroing in on the markings used to label the right-hand side of the image3. It is obvious, to a person at least, that there is no causal relationship between the style and placement of the letter ‘R’ on an X-ray and signs of lung disease. But without that understanding, any differences in how such markings are drawn or positioned could be enough to steer a machine down the wrong path.

For computers to perform any sort of decision making, they will need an understanding of causality, says Murat Kocaoglu, an electrical engineer at Purdue University in West Lafayette, Indiana. “Anything beyond prediction requires some sort of causal understanding,” he says. “If you want to plan something, if you want to find the best policy, you need some sort of causal reasoning module.”

Incorporating models of cause and effect into machine-learning algorithms could also help mobile autonomous machines to make decisions about how they navigate the world. “If you’re a robot, you want to know what will happen when you take a step here with this angle or that angle, or if you push an object,” Kocaoglu says.

In Bhattacharya’s case, it was possible that some of the genes that the system was highlighting were responsible for a better response to the treatment. But a lack of understanding of causality meant that it was also possible that the treatment was affecting the gene expression — or that another, hidden factor was influencing both. The potential solution to this problem lies in something known as causal inference — a formal, mathematical way to ascertain whether one variable affects another.
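
The third possibility, a hidden factor driving both variables, is easy to reproduce in a toy simulation. The sketch below is purely illustrative: the variable names, the linear relationships and the noise levels are assumptions, not Bhattacharya's data. In this made-up model the gene has no effect on the response, yet patients with high expression respond better on average; forcing the gene to a fixed level (an intervention) makes the apparent effect disappear.

```python
import random
from statistics import fmean

def sample(rng, set_gene=None):
    """Draw one (gene, response) pair from a toy model with a hidden common cause.

    If set_gene is given, the gene level is forced to that value (an intervention)
    instead of being produced by the hidden factor.
    """
    hidden = rng.gauss(0.0, 1.0)  # hypothetical unmeasured factor
    if set_gene is None:
        gene = hidden + rng.gauss(0.0, 0.5)  # expression tracks the hidden factor
    else:
        gene = set_gene
    response = 2.0 * hidden + rng.gauss(0.0, 0.5)  # the gene never enters this equation
    return gene, response

def observational_gap(n, rng):
    """Mean response of high- minus low-expression patients, as passively observed."""
    data = [sample(rng) for _ in range(n)]
    high = [r for g, r in data if g > 0]
    low = [r for g, r in data if g <= 0]
    return fmean(high) - fmean(low)

def interventional_gap(n, rng):
    """Mean response when the gene is set high minus when it is set low."""
    high = [sample(rng, set_gene=1.0)[1] for _ in range(n)]
    low = [sample(rng, set_gene=-1.0)[1] for _ in range(n)]
    return fmean(high) - fmean(low)

if __name__ == "__main__":
    rng = random.Random(42)
    print(f"observed gap:   {observational_gap(20_000, rng):.2f}")
    print(f"intervened gap: {interventional_gap(20_000, rng):.2f}")
```

Under these assumptions the observed gap comes out large while the intervened gap sits close to zero: the correlation is real, but it says nothing about what changing the gene would do.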

Computer scientist Rohit Bhattacharya (back) and his team at Williams College in Williamstown, Massachusetts, discuss adapting machine learning for causal inference. Credit: Mark Hopkins

Causal inference has long been used by economists and epidemiologists to test their ideas about causation. The 2021 Nobel prize in economic sciences went to three researchers who used causal inference to ask questions such as whether a higher minimum wage leads to lower employment, or what effect an extra year of schooling has on future income. Now, Bhattacharya is among a growing number of computer scientists who are working to meld causality with AI to give machines the ability to tackle such questions, helping them to make better decisions, learn more efficiently and adapt to change.

A notion of cause and effect helps to guide humans through the world. “Having a causal model of the world, even an imperfect one — because that’s what we have — allows us to make more robust decisions and predictions,” says Yoshua Bengio, a computer scientist who directs Mila – Quebec Artificial Intelligence Institute, a collaboration between four universities in Montreal, Canada. Humans’ grasp of causality supports attributes such as imagination and regret; giving computers a similar ability could transform their capabilities.

Climbing the ladder

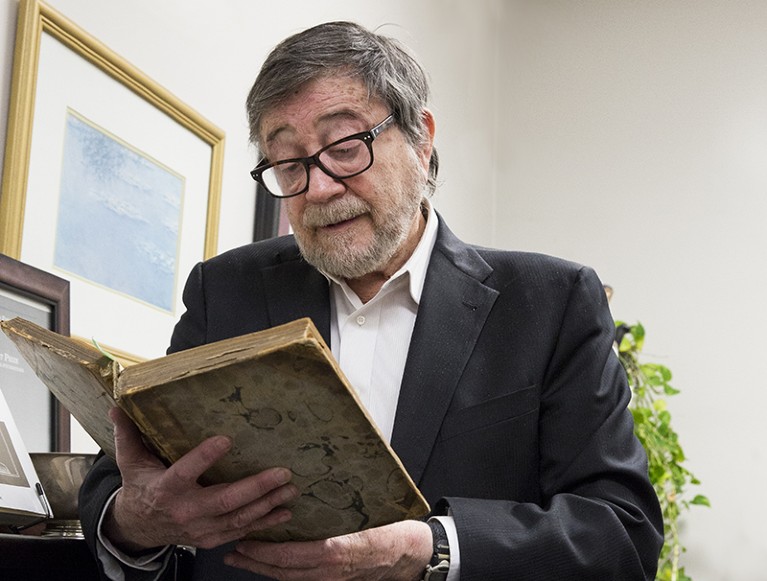

The headline successes of AI over the past decade — such as winning against people at various competitive games, identifying the content of images and, in the past few years, generating text and pictures in response to written prompts — have been powered by deep learning. By studying reams of data, such systems learn how one thing correlates with another. These learnt associations can then be put to use. But this is just the first rung on the ladder towards a loftier goal: something that Judea Pearl, a computer scientist and director of the Cognitive Systems Laboratory at the University of California, Los Angeles, refers to as “deep understanding”.

In 2011, Pearl won the A.M. Turing Award, often referred to as the Nobel prize for computer science, for his work developing a calculus to allow probabilistic and causal reasoning. He describes a three-level hierarchy of reasoning4. The base level is ‘seeing’, or the ability to make associations between things. Today’s AI systems are extremely good at this. Pearl refers to the next level as ‘doing’ — making a change to something and noting what happens. This is where causality comes into play.

A computer can develop a causal model by examining interventions: how changes in one variable affect another. Instead of creating one statistical model of the relationship between variables, as in current AI, the computer makes many. In each one, the relationship between the variables stays the same, but the values of one or several of the variables are altered. That alteration might lead to a new outcome. All of this can be evaluated using the mathematics of probability and statistics. “The way I think about it is, causal inference is just about mathematizing how humans make decisions,” Bhattacharya says.
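
The idea of keeping every relationship fixed while altering one variable can be written down directly. The sketch below uses made-up probabilities for a hypothetical confounder Z, treatment X and outcome Y, and builds a small joint distribution from three mechanisms. 'Seeing' conditions on a value of X in that distribution; 'doing' rebuilds the distribution with the mechanism for X replaced by a constant while the mechanisms for Z and Y are left untouched, which is the truncated factorization of Pearl's framework.

```python
from itertools import product

# Hypothetical mechanisms (all numbers are invented for illustration).
P_Z1 = 0.5                                   # P(Z = 1)
P_X1_GIVEN_Z = {0: 0.2, 1: 0.8}              # P(X = 1 | Z = z)
P_Y1_GIVEN_XZ = {(0, 0): 0.3, (1, 0): 0.4,   # P(Y = 1 | X = x, Z = z)
                 (0, 1): 0.6, (1, 1): 0.7}

def bernoulli(p_one, value):
    """Probability that a binary variable with P(1) = p_one takes `value`."""
    return p_one if value == 1 else 1.0 - p_one

def joint(do_x=None):
    """Joint distribution over (z, x, y).

    With do_x set, the mechanism for X is replaced by a constant; the
    mechanisms for Z and Y are reused unchanged.
    """
    table = {}
    for z, x, y in product((0, 1), repeat=3):
        p_x = bernoulli(P_X1_GIVEN_Z[z], x) if do_x is None else float(x == do_x)
        table[(z, x, y)] = bernoulli(P_Z1, z) * p_x * bernoulli(P_Y1_GIVEN_XZ[(x, z)], y)
    return table

def p_y1_given_x(table, x):
    """P(Y = 1 | X = x) computed from a joint table."""
    numerator = sum(p for (_, xv, yv), p in table.items() if xv == x and yv == 1)
    denominator = sum(p for (_, xv, _), p in table.items() if xv == x)
    return numerator / denominator

seeing = p_y1_given_x(joint(), 1) - p_y1_given_x(joint(), 0)
doing = p_y1_given_x(joint(do_x=1), 1) - p_y1_given_x(joint(do_x=0), 0)
print(f"seeing: {seeing:.2f}, doing: {doing:.2f}")  # seeing: 0.28, doing: 0.10
```

Conditioning suggests that X raises the chance of Y by 28 percentage points; intervening reveals that only 10 points come from X itself, with the remainder due to Z pushing both variables in the same direction.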

Yoshua Bengio (front) directs Mila – Quebec Artificial Intelligence Institute in Montreal, Canada. Credit: Mila-Quebec AI Institute

Bengio, who won the A.M. Turing Award in 2018 for his work on deep learning, and his students have trained a neural network to generate causal graphs5 — a way of depicting causal relationships. At their simplest, if one variable causes another variable, it can be shown with an arrow running from one to the other. If the direction of causality is reversed, so too is the arrow. And if the two are unrelated, there will be no arrow linking them. Bengio’s neural network is designed to randomly generate one of these graphs, and then check how compatible it is with a given set of data. Graphs that fit the data better are more likely to be accurate, so the neural network learns to generate more graphs similar to those, searching for one that fits the data best.
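
A much simpler stand-in for that propose-and-score loop is sketched below. It is not the neural network developed by Bengio's group: graphs are proposed uniformly at random rather than by a trained generator, and 'compatibility with the data' is measured with the Bayesian information criterion (BIC), one standard score for discrete data. The three binary variables, the OR-gate mechanism and the 10% noise level are assumptions made for illustration. Data are simulated from the graph X → Z ← Y, and the search keeps the best-scoring acyclic graph it encounters.

```python
import math
import random
from collections import Counter

NODES = ("X", "Y", "Z")
POSSIBLE_EDGES = [(a, b) for a in NODES for b in NODES if a != b]

def simulate(n, rng):
    """Toy data from X -> Z <- Y: Z is an OR of X and Y, flipped 10% of the time."""
    rows = []
    for _ in range(n):
        x = rng.random() < 0.5
        y = rng.random() < 0.5
        z = (x or y) != (rng.random() < 0.1)
        rows.append({"X": int(x), "Y": int(y), "Z": int(z)})
    return rows

def is_acyclic(edges):
    """Repeatedly strip nodes with no incoming edges; a cycle leaves nodes behind."""
    remaining = set(NODES)
    while remaining:
        roots = {v for v in remaining
                 if not any(b == v and a in remaining for a, b in edges)}
        if not roots:
            return False
        remaining -= roots
    return True

def bic_score(edges, rows):
    """Log-likelihood of the best-fitting probability tables, minus a size penalty."""
    n = len(rows)
    score = 0.0
    for node in NODES:
        parents = sorted(a for a, b in edges if b == node)
        config = [tuple(r[p] for p in parents) for r in rows]
        joint = Counter(zip(config, (r[node] for r in rows)))
        parent_counts = Counter(config)
        loglik = sum(c * math.log(c / parent_counts[pa]) for (pa, _), c in joint.items())
        n_params = 2 ** len(parents)  # one free probability per parent configuration
        score += loglik - 0.5 * n_params * math.log(n)
    return score

def random_search(rows, proposals, rng):
    """Propose random edge sets, discard cyclic ones, keep the best-scoring graph."""
    cache = {}
    best_edges, best_score = None, -math.inf
    for _ in range(proposals):
        edges = frozenset(e for e in POSSIBLE_EDGES if rng.random() < 0.5)
        if not is_acyclic(edges):
            continue
        if edges not in cache:
            cache[edges] = bic_score(edges, rows)
        if cache[edges] > best_score:
            best_edges, best_score = edges, cache[edges]
    return best_edges

if __name__ == "__main__":
    rng = random.Random(0)
    data = simulate(3000, rng)
    print(sorted(random_search(data, 2000, rng)))
```

Every fully connected graph fits the data equally well, so it is the size penalty in the score that steers the search towards the sparser graph with two arrows pointing into Z.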

This approach is akin to how people work things out: they generate possible causal relationships, and assume that the ones that best fit an observation are closest to the truth. Watching a glass shatter when it is dropped onto concrete, for instance, might lead a person to think that the impact on a hard surface causes the glass to break. Dropping other objects onto concrete, or knocking a glass onto a soft carpet, from a variety of heights, enables a person to refine their model of the relationship and better predict the outcome of future fumbles.

Face the changes

A key benefit of causal reasoning is that it could make AI more able to deal with changing circumstances. Existing AI systems that base their predictions only on associations in data are acutely vulnerable to any changes in how those variables are related. When the statistical distribution of learnt relationships changes — whether owing to the passage of time, human actions or another external factor — the AI will become less accurate.
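
A toy version of the X-ray problem shows how this happens. In the sketch below, which uses invented variables and rates, a 'lesion' feature is caused by the disease and agrees with the label 80% of the time, whereas a 'marker' feature (standing in for how an image happens to be labelled) agrees 95% of the time at the training hospital but is unrelated to disease elsewhere. A learner that simply keeps the most predictive feature picks the marker, and its accuracy falls to chance once the distribution changes.

```python
import random

def make_data(n, marker_agreement, rng):
    """Hypothetical patients with a causal 'lesion' signal and a spurious 'marker'."""
    rows = []
    for _ in range(n):
        disease = rng.random() < 0.5
        # The lesion is caused by the disease and is equally reliable in every hospital.
        lesion = disease if rng.random() < 0.8 else not disease
        # The marker matches the disease only as often as local labelling habits dictate.
        marker = disease if rng.random() < marker_agreement else not disease
        rows.append({"lesion": lesion, "marker": marker, "disease": disease})
    return rows

def accuracy(rows, feature):
    """Fraction of rows where predicting 'disease = feature' is correct."""
    return sum(r[feature] == r["disease"] for r in rows) / len(rows)

def fit_single_feature(rows):
    """A purely associative learner: keep whichever feature best matches the labels."""
    return max(("lesion", "marker"), key=lambda f: accuracy(rows, f))

if __name__ == "__main__":
    rng = random.Random(7)
    train = make_data(10_000, marker_agreement=0.95, rng=rng)
    deploy = make_data(10_000, marker_agreement=0.5, rng=rng)
    chosen = fit_single_feature(train)
    print(chosen, f"train={accuracy(train, chosen):.2f}", f"deploy={accuracy(deploy, chosen):.2f}")
    print("lesion", f"deploy={accuracy(deploy, 'lesion'):.2f}")
```

A model that relied on the lesion would keep roughly its 80% accuracy in both settings, because the mechanism linking disease to lesion is the one that does not change.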

For instance, Bengio could train a self-driving car on his local roads in Montreal, and the AI might become good at operating the vehicle safely. But export that same system to London, and it would immediately break for a simple reason: cars are driven on the right in Canada and on the left in the United Kingdom, so some of the relationships the AI had learnt would be backwards. He could retrain the AI from scratch using data from London, but that would take time, and would mean that the software would no longer work in Montreal, because its new model would replace the old one.

A causal model, on the other hand, allows the system to learn about many possible relationships. “Instead of having just one set of relationships between all the things you could observe, you have an infinite number,” Bengio says. “You have a model that accounts for what could happen under any change to one of the variables in the environment.”

Humans operate with such a causal model, and can therefore quickly adapt to changes. A Canadian driver could fly to London and, after taking a few moments to adjust, could drive perfectly well on the left side of the road. The UK Highway Code means that, unlike in Canada, right turns involve crossing traffic, but it has no effect on what happens when the driver turns the wheel or how the tyres interact with the road. “Everything we know about the world is essentially the same,” Bengio says. Causal modelling enables a system to identify the effects of an intervention and account for it in its existing understanding of the world, rather than having to relearn everything from scratch.
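
One way to picture this modularity is to store a causal model as a set of separate mechanisms, each computing one variable from others. In the hypothetical sketch below, moving from Montreal to London means swapping a single mechanism (which side of the road traffic keeps to); the mechanism that decides whether a turn crosses oncoming traffic, and a toy steering response, are reused as they are. The variable names and the steering gain are invented for illustration.

```python
from typing import Callable, Dict

Mechanism = Callable[[dict], object]

def crosses_traffic(v: dict) -> bool:
    # Turning away from the side you drive on means cutting across oncoming lanes.
    return v["turn"] != v["lane_side"]

def heading_change(v: dict) -> float:
    # Toy steering physics, identical in every country (the gain of 0.5 is made up).
    return 0.5 * v["wheel_angle"]

MONTREAL: Dict[str, Mechanism] = {
    "lane_side": lambda v: "right",
    "crosses_traffic": crosses_traffic,
    "heading_change": heading_change,
}
# Adapting to London replaces one mechanism and reuses the rest unchanged.
LONDON: Dict[str, Mechanism] = {**MONTREAL, "lane_side": lambda v: "left"}

ORDER = ("lane_side", "crosses_traffic", "heading_change")  # causes before effects

def run(model: Dict[str, Mechanism], turn: str, wheel_angle: float) -> dict:
    """Evaluate each mechanism in causal order, starting from the driver's actions."""
    v = {"turn": turn, "wheel_angle": wheel_angle}
    for name in ORDER:
        v[name] = model[name](v)
    return v

if __name__ == "__main__":
    for city, model in (("Montreal", MONTREAL), ("London", LONDON)):
        result = run(model, turn="right", wheel_angle=20.0)
        print(city, result["crosses_traffic"], result["heading_change"])
```

A right turn crosses traffic only in the London model, while the steering response is the same in both, because nothing about it had to be relearnt.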

Judea Pearl, director of the Cognitive Systems Laboratory at the University of California, Los Angeles, won the 2011 A.M. Turing Award. Credit: UCLA Samueli School of Engineering

This ability to grapple with changes without scrapping everything we know also allows humans to make sense of situations that aren’t real, such as fantasy movies. “Our brain is able to project ourselves into an invented environment in which some things have changed,” Bengio says. “The laws of physics are different, or there are monsters, but the rest is the same.”

Counter to fact

The capacity for imagination is at the top of Pearl’s hierarchy of causal reasoning. The key here, Bhattacharya says, is speculating about the outcomes of actions not taken.

Bhattacharya likes to explain such counterfactuals to his students by reading them ‘The Road Not Taken’ by Robert Frost. In this poem, the narrator talks of having to choose between two paths through the woods, and expresses regret that they can’t know where the other road leads. “He’s imagining what his life would look like if he walks down one path versus another,” Bhattacharya says. That is what computer scientists would like to replicate with machines capable of causal inference: the ability to ask ‘what if’ questions.
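
Pearl's framework answers such 'what if' questions in three steps: abduction (use what actually happened to infer the unobserved circumstances), action (change the choice) and prediction (rerun the model with those circumstances held fixed). A minimal sketch follows, assuming a made-up linear model in which the outcome depends on the path chosen plus an unobserved personal factor.

```python
def outcome(path: int, circumstances: float) -> float:
    """Hypothetical structural equation: outcome depends on the path and hidden circumstances."""
    return 2.0 * path + circumstances

def counterfactual_outcome(path_taken: int, observed: float, other_path: int) -> float:
    # 1. Abduction: recover the hidden circumstances consistent with what happened.
    circumstances = observed - 2.0 * path_taken
    # 2. Action: swap in the path not taken.
    # 3. Prediction: rerun the same equation with the same circumstances.
    return outcome(other_path, circumstances)

if __name__ == "__main__":
    print(counterfactual_outcome(path_taken=1, observed=5.0, other_path=0))  # 3.0
```

The abduction step is what separates a counterfactual from an ordinary intervention: the answer is tailored to the individual case, because the circumstances inferred from it are carried over into the imagined scenario.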

Imagining whether an outcome would have been better or worse if we’d taken a different action is an important way that humans learn. Bhattacharya says it would be useful to imbue AI with a similar capacity for what is known as ‘counterfactual regret’. The machine could run scenarios on the basis of choices it didn’t make and quantify whether it would have been better off making a different one. Some scientists have already used counterfactual regret to help a computer improve its poker playing6.
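
Counterfactual regret minimization, a widely used approach to poker, is built on a simpler update rule called regret matching. The sketch below applies regret matching to rock-paper-scissors, a far simpler game than poker, chosen here only for brevity. In each round, each player asks how much more it would have earned with every action it did not take, adds those regrets to a running total, and then plays actions in proportion to their positive regret. In two-player self-play, the average strategy drifts towards the equilibrium of choosing each option a third of the time.

```python
ACTIONS = ("rock", "paper", "scissors")

def payoff(a: int, b: int) -> int:
    """+1 if action a beats action b, -1 if it loses, 0 for a tie."""
    if a == b:
        return 0
    return 1 if (a - b) % 3 == 1 else -1

def strategy_from(regrets):
    """Regret matching: play each action in proportion to its positive cumulative regret."""
    positive = [max(r, 0.0) for r in regrets]
    total = sum(positive)
    if total > 0:
        return [p / total for p in positive]
    return [1.0 / len(regrets)] * len(regrets)

def self_play(iterations, initial_regrets=((1.0, 0.0, 0.0), (0.0, 0.0, 0.0))):
    """Two regret-matching players; returns each player's average strategy."""
    regrets = [list(r) for r in initial_regrets]
    strategy_sums = [[0.0] * 3 for _ in range(2)]
    for _ in range(iterations):
        strategies = [strategy_from(r) for r in regrets]
        for me in (0, 1):
            opponent = strategies[1 - me]
            # Counterfactual values: what each action would have earned this round.
            values = [sum(opponent[b] * payoff(a, b) for b in range(3)) for a in range(3)]
            actual = sum(strategies[me][a] * values[a] for a in range(3))
            for a in range(3):
                regrets[me][a] += values[a] - actual
                strategy_sums[me][a] += strategies[me][a]
    return [[s / iterations for s in sums] for sums in strategy_sums]

def exploitability(strategy):
    """Best average payoff an opponent could earn against a fixed mixed strategy."""
    return max(sum(strategy[a] * payoff(b, a) for a in range(3)) for b in range(3))

if __name__ == "__main__":
    average = self_play(50_000)[0]
    print({name: round(p, 3) for name, p in zip(ACTIONS, average)})
    print(f"exploitability: {exploitability(average):.4f}")
```

The first player starts out biased towards rock, yet its average strategy ends up close to uniform, leaving an opponent almost nothing to exploit.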

The ability to imagine different scenarios could also help to overcome some of the limitations of existing AI, such as the difficulty of reacting to rare events. By definition, Bengio says, rare events show up only sparsely, if at all, in the data that a system is trained on, so the AI can’t learn about them. A person driving a car can imagine an occurrence they’ve never seen, such as a small plane landing on the road, and use their understanding of how things work to devise potential strategies to deal with that specific eventuality. A self-driving car without the capability for causal reasoning, however, could at best default to a generic response for an object in the road. By using counterfactuals to learn rules for how things work, cars could be better prepared for rare events. Working from causal rules rather than a list of previous examples ultimately makes the system more versatile.

Using causality to program imagination into a computer could even lead to the creation of an automated scientist. During a 2021 online summit sponsored by Microsoft Research, Pearl suggested that such a system could generate a hypothesis, pick the best observation to test that hypothesis and then decide what experiment would provide that observation.
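
The step of choosing an experiment can be made concrete with a small, hypothetical sketch; it does not describe any system Pearl proposed. Each candidate hypothesis is a causal graph over two variables, A and B, and each candidate experiment is either passive observation or forcing one variable to a new value. If every hypothesis is equally likely and each makes a definite qualitative prediction, the expected information an experiment yields equals the entropy of its predicted outcomes, so the best experiment is the one that splits the hypotheses most evenly.

```python
import math
from collections import Counter

# Hypothetical candidate explanations, each a set of directed edges.
HYPOTHESES = {
    "A causes B": {("A", "B")},
    "B causes A": {("B", "A")},
    "no link": set(),
}
EXPERIMENTS = ("observe", "set A", "set B")

def predict(edges, experiment):
    """Qualitative outcome that a causal graph predicts for an experiment."""
    if experiment == "observe":
        return "correlated" if edges else "independent"
    target = experiment.split()[-1]          # "set A" -> "A"
    other = "B" if target == "A" else "A"
    return "other moves" if (target, other) in edges else "other unchanged"

def information_gain(experiment, hypotheses):
    """Entropy (bits) of the predicted outcome when every hypothesis is equally likely."""
    counts = Counter(predict(edges, experiment) for edges in hypotheses.values())
    total = sum(counts.values())
    return -sum(c / total * math.log2(c / total) for c in counts.values())

def best_experiment(hypotheses):
    """Pick the experiment expected to be most informative (the first one wins ties)."""
    return max(EXPERIMENTS, key=lambda e: information_gain(e, hypotheses))

if __name__ == "__main__":
    rivals = {k: HYPOTHESES[k] for k in ("A causes B", "B causes A")}
    print(best_experiment(rivals))  # set A
```

Passive observation cannot separate these two rivals, because both predict a correlation, whereas forcing either variable can. If the rivals were instead 'A causes B' and 'no link', watching alone would be just as informative.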

Right now, however, this remains a way off. The theory and basic mathematics of causal inference are well established, but the methods for AI to realize interventions and counterfactuals are still at an early stage. “This is still very fundamental research,” Bengio says. “We’re at the stage of figuring out the algorithms in a very basic way.” Once researchers have grasped these fundamentals, algorithms will then need to be optimized to run efficiently. It is uncertain how long this will all take. “I feel like we have all the conceptual tools to solve this problem and it’s just a matter of a few years, but usually it takes more time than you expect,” Bengio says. “It might take decades instead.”

Bhattacharya thinks that researchers should take a leaf out of machine learning’s book. That field’s rapid proliferation was driven in part by programmers developing open-source software that gives others access to the basic tools for writing algorithms. Equivalent tools for causal inference could have a similar effect. “There’s been a lot of exciting developments in recent years,” Bhattacharya says, including some open-source packages from tech giant Microsoft and from Carnegie Mellon University in Pittsburgh, Pennsylvania. He and his colleagues also developed an open-source causal module they call Ananke. But these software packages remain a work in progress.

Bhattacharya would also like to see the concept of causal inference introduced at earlier stages of computer education. Right now, he says, the topic is taught mainly at the graduate level, whereas machine learning is common in undergraduate training. “Causal reasoning is fundamental enough that I hope to see it introduced in some simplified form at the high-school level as well,” he says.

If these researchers are successful at building causality into computing, it could bring AI to a whole new level of sophistication. Robots could navigate their way through the world more easily. Self-driving cars could become more reliable. Programs for evaluating the activity of genes could lead to new understanding of biological mechanisms, which in turn could allow the development of new and better drugs. “That could transform medicine,” Bengio says.

Even something such as ChatGPT, the popular natural-language generator that produces text that reads as though it could have been written by a human, could benefit from incorporating causality. Right now, the algorithm can give itself away by producing clearly written prose that is self-contradictory or at odds with what we know to be true about the world. With causality, ChatGPT could build a coherent plan for what it was trying to say, and ensure that it was consistent with facts as we know them.

Asked whether that would put writers out of business, Bengio says that could take some time. “But how about you lose your job in ten years, but you’re saved from cancer and Alzheimer’s,” he says. “That’s a good deal.”