“Organisms try not to process information that they don’t need to because that processing is very expensive, in terms of metabolic energy,” he says. Polani is interested in applying these lessons from biology to the vast networks that power robots, to make them more efficient with their information. Simply reducing the amount of information a robot is allowed to process will just make it weaker, depending on the nature of the task it’s been given, he says. Instead, robots should learn to use the data they have more intelligently.

Simplifying software

Amazon, which has more than 750,000 robots, the largest such fleet in the world, is also interested in using AI to help its robots make smarter, safer, and more efficient decisions. Amazon’s robots mostly fall into two categories: mobile robots that move stock, and robotic arms designed to handle objects. The AI systems that power these machines collect millions of data points every day to help train them to complete their tasks. For example, they must learn which item to grasp and move from a pile, or how to safely avoid human warehouse workers. These processes require a lot of computing power, which newer AI techniques can help reduce.

Generally, robotic arms and similar “manipulation” robots use machine learning for tasks such as identifying objects, then follow hard-coded rules or algorithms to decide how to act. With generative AI, these same robots can predict the outcome of an action before attempting it, so they can choose the action most likely to succeed or determine the best way to grasp an object that needs to be moved.

These learning systems are much more scalable than traditional methods of training robots, and the combination of generative AI and massive data sets helps streamline the sequencing of a task and cut out layers of unnecessary analysis. That’s where the savings in computing power come in. “We can simplify the software by asking the models to do more,” says Michael Wolf, a principal scientist at Amazon Robotics. “We are entering a phase where we’re fundamentally rethinking how we build autonomy for our robotic systems.”

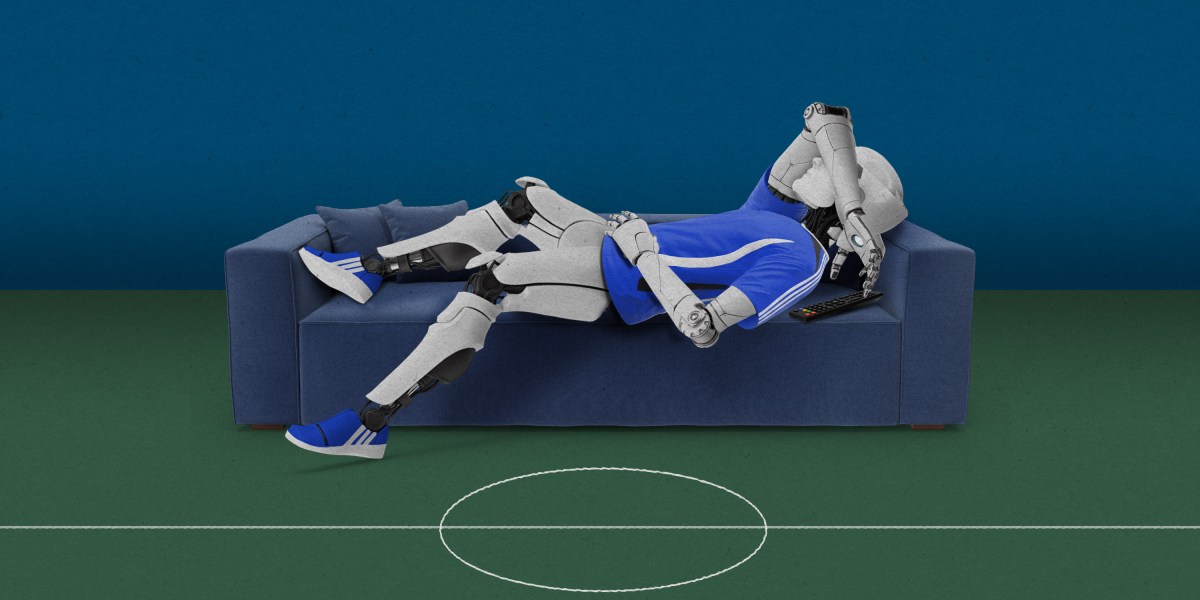

Achieving more by doing less

This year’s RoboCup competition may be over, but Van de Molengraft isn’t resting on his laurels after his team’s resounding success. “There’s still a lot of computational activities going on in each of the robots that are not per se necessary at each moment in time,” he says. He’s already starting work on new ways to make his robotic team even lazier to gain an edge on its rivals next year.

Although current robots are still nowhere near as energy efficient as humans, he’s optimistic that researchers will continue to make headway and that we’ll start to see a lot more lazy robots that are better at their jobs. But it won’t happen overnight. “Increasing our robots’ awareness and understanding so that they can better perform their tasks, be it football or any other task in basically any domain in human-built environments—that’s a continuous work in progress,” he says.