Inside a “clean room” within ASML’s sprawling campus in the Dutch town of Veldhoven, dozens of men and women in hazmat suits are breathing air that is 10,000 times purer than the air in an operating theatre.

They are working on the first prototype of the chip toolmaker’s newest product: the latest generation of extreme ultraviolet photolithography machines that will be used to “print” transistors almost as small as the diameter of a human chromosome on to sheets of silicon to make a semiconductor. This EUV machine is due to ship to Intel this year at a cost of more than €350mn.

Without ASML’s lithography machines, products as ubiquitous as Apple’s iPhones or as sophisticated as the Nvidia chips that power ChatGPT would be impossible. Only three companies in the world — Intel, Samsung and TSMC — are capable of manufacturing the advanced processors that make these products possible; all rely on ASML’s cutting-edge equipment to do so.

The innovations achieved by ASML have ensured that transistors have continued to shrink, thereby making chips more powerful. The pace of progress in the technology industry over the past five decades has been made possible by exponential increases in semiconductor transistor density.

This rate of progress was predicted by the Intel co-founder Gordon Moore, who said in 1965 that the number of transistors incorporated in a chip would approximately double every year. That projection, later revised to every two years, came to be known as Moore’s Law.
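To give a sense of what such a doubling rule implies, the following is a minimal sketch rather than anything taken from Moore’s paper. The function name, the starting count of 1,000 transistors and the 10-year horizon are assumptions chosen purely for illustration. It compares the original one-year doubling period with the revised two-year period and, for comparison, a slower three-year cadence.

```python
def projected_transistors(start_count: float, years: float,
                          doubling_period_years: float) -> float:
    """Return the transistor count after `years` if the count doubles
    once every `doubling_period_years` (simple exponential growth)."""
    return start_count * 2 ** (years / doubling_period_years)


if __name__ == "__main__":
    start = 1_000   # hypothetical starting count, for illustration only
    horizon = 10    # hypothetical number of years to project forward
    for period in (1, 2, 3):
        count = projected_transistors(start, horizon, period)
        print(f"doubling every {period} year(s): about {count:,.0f} transistors")
```

Under these assumed inputs, ten years of annual doubling multiplies the count by 1,024, while doubling every two years multiplies it by only 32. That shows how sensitive the outcome is to the cadence.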

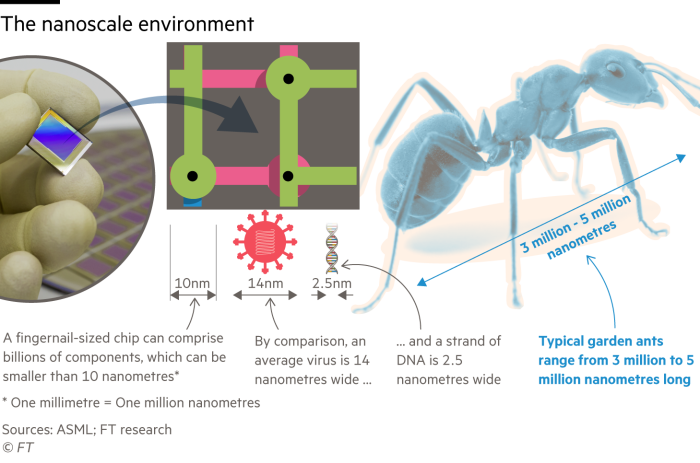

While Intel itself was responsible for much of that progress, through its relentless innovations in semiconductor manufacturing and process engineering, it is now ASML that is seen as keeping Moore’s Law alive, helping manufacture chips the size of a fingernail that can hold about 50bn transistors.

“What’s driven Moore’s Law? It’s basically lithography,” says Jamie Mills O’Brien, an investment manager at Abrdn, a top 50 investor in ASML.

That shift in value is reflected in the two companies’ stock market valuations. ASML, listed in Amsterdam and New York, is now worth roughly twice as much as Intel. The US chip pioneer largely missed the last decade’s megatrends in smartphones and AI because it was unable to keep pace with Taiwanese chipmaker TSMC, itself one of the earliest adopters of ASML’s EUV technology.

However, chipmakers now face a daunting challenge. Moore’s prediction has fallen behind schedule and the cadence is now closer to three years. The latest 3-nanometre chips being mass produced for this year’s iPhones will be followed by what some see as an even bigger leap forward to 2nm by 2025. “But once you get to 1.5nm, maybe 1nm, Moore’s Law is 100 per cent dead,” says Ben Bajarin, a technology analyst at Silicon Valley-based Creative Strategies. “There’s just no way.”

Chip engineers have defied forecasts of an end to Moore’s Law for years. But the number of transistors that can be packed on to a silicon die is starting to run into the fundamental limits of physics. Some fear manufacturing defects are rising as a result; development costs already have. “The economics of the law are gone,” says Bajarin.

That has sent chip designers into a scramble over the past few years for alternative ways to sustain advances in processing power, ranging from new design techniques and materials to using the very AI enabled by the latest chips to help design new ones.

At stake is not just sustaining a pace of innovation that has underpinned the tech industry and, by extension, continued economic growth and radical improvements to our everyday lives for decades. If developments from AI and the “metaverse” to long-promised advances in clean energy and autonomous transportation are to live up to their potential, then chips need to keep getting cheaper, more powerful and more efficient.

“Someday it has to stop,” Moore, who died in March, warned in 2015 as his original paper marked its 50th anniversary. “No exponential like this goes on forever.”

Exponential growth

If ASML is keeping Moore’s Law intact, it is having to spend billions — while achieving unfathomable feats of physics and engineering — to do so.

Spun out of Philips in the 1980s, ASML started its operations in a portacabin in the car park of its parent company’s site in Eindhoven, a small city of fewer than 300,000 residents. Today, ASML is Europe’s most valuable tech company, with a market capitalisation of about €275bn.

From its headquarters in Veldhoven, just a few kilometres away from that portacabin, ASML produces machines capable of vaporising tiny droplets of molten tin up to 50,000 times a second, creating a 13.5nm wavelength of light. This EUV light is then bounced off a series of mirrors inside a vacuum chamber, narrowed and focused until it hits a silicon wafer.

“Moore’s Law is a vehicle of economics: every two to three years you can double the performance at the same cost,” says ASML’s chief executive, Peter Wennink.

But, he adds, “there’s another Moore’s Law function nobody talks about: the Moore’s Law of complexity. Every two to three years there is a new generation of chips. It’s not getting easier. The complexity also goes up exponentially.”

The company’s high numerical aperture (NA) machine is the latest output of its huge research and development investment, which rose 30 per cent to €3.3bn in 2022. High-NA essentially expands the numerical aperture — or range of angles — over which the light can be bent and emitted, allowing it to create smaller transistor patterns on a wafer.

ASML has just five customers for its existing EUV machines — TSMC in Taiwan, Samsung and SK Hynix in South Korea, and Intel and Micron in the US. All of them have ordered the latest model.

The Dutch company’s monopoly on EUV machines (it is also the largest producer of deep ultraviolet, or DUV, machines, which are crucial for production of the larger and more ubiquitous chips found in cars and household appliances) has won it fans on Wall Street as well as in Silicon Valley.

ASML’s profits have more than doubled over the past five years, with a corresponding 300 per cent rise in its share price since mid-2018.

Despite currently being caught up in a fierce geopolitical battle between the US and China, and a wider slowdown in demand for chips after the pandemic-driven boom created an inventory glut, ASML is betting on a doubling in the size of the semiconductor market in the coming years: from $600bn today to up to $1.3tn by 2030.

It has a $40bn backlog of orders to prove demand is still resilient and plans to invest more than €4bn in R&D by 2025 to sustain its pace of innovation.

If the end of Moore’s Law threatens all that, Wennink does a good job of pretending otherwise. He is “not concerned at all” about it, he says, but concedes that expectation of continuous progress is ASML’s “biggest competitor”.

“We are the most expensive machine in the production process,” he says. “If we cannot provide our customers with the ability to reduce cost or increase value, our customers will find other ways.”

Extending Moore’s Law

Chip designers have already begun to plan for such an eventuality. “There are many tools in the toolbox to cram more transistors in,” says Nigel Toon, chief executive of UK-based AI chip start-up Graphcore.

“Moore’s Law may have ended, but that doesn’t mean innovation has died,” says Hassan Khan, an executive fellow at Carnegie Mellon University who leads the US National Network for Critical Technology Assessment’s work on semiconductors and supply chains.

“In the public sphere there is a conflation of Moore’s Law and technical progress, as if the only thing that drives innovation is cheaper transistors. Humans are smart at finding bottlenecks and finding ways around them.”

After several decades where the “central processing unit” pioneered by Intel enabled the creation of general-purpose, Swiss Army Knife-style computers such as PCs and smartphones, “the link between hardware and software is coming back”, says Ondrej Burkacky, who co-leads McKinsey’s semiconductors practice.

Perhaps the most widely distributed example of this is the iPhone.

Bespoke chips are a large part of how Apple has been able to continue to differentiate its smartphones from those running Google’s Android operating system. Apple is able to develop specific iPhone software features in concert with its in-house silicon design team, which now runs into thousands of people. That is harder for Android manufacturers because Google’s software has to support thousands of different makes of phone, from cheap basic handsets to Samsung’s latest flagship model costing upwards of $1,000.

Apple fine-tunes the industry-standard smartphone chips designed by UK-based Arm to ensure faster performance or longer battery life for its iPhones. It became so good at this that it was able to replace Intel with a custom Arm-based chip for its Macs in 2020.

As designers seek ever-higher performance for particular tasks, some companies are going even further in rethinking chip “architectures”, or the fundamentals of how processors are designed and packaged. Companies like Graphcore can “start with a clean sheet of paper”, says Toon. “You have to think more about the right architecture for the right application.”

Nvidia, now the world’s most valuable semiconductor company with a market capitalisation that hit $1tn this week, cut its teeth on niche graphics cards for video gamers and research scientists before striking gold when its graphics processing units became a must-have for every AI company. Both graphics and AI are matched well to Nvidia’s “parallel processing” technology, which is built to handle repetitive tasks such as rendering polygons or crunching algorithms.

For the first 30 years of Nvidia’s existence, according to its chief and co-founder Jensen Huang, “we were the company you called to solve nearly impossible problems”.

The issue was that the volumes involved in catering to research sectors such as computational biology and similar domains were “tiny, every one of ’em”, he tells the Financial Times. “Our company’s business was characterised as: ‘We solve the zero billion dollar problems’,” he says. “Then all of a sudden, Moore’s Law ended . . . Now we’re the ‘if you wanna grow’ computing company.”

However, one consequence of chip innovation being more narrowly focused is that any breakthroughs tend to be more zealously guarded and less transferable to the wider market.

“Through the 1990s and early 2000s, cost per transistor and the ability to build more complex chips was roughly free to the entire industry,” says Khan. “[Now] computation is less of a general purpose technology . . . If I’m optimising chips for AI, that might make GPT more efficient or powerful but it may not spill over into the rest of the economy.”

Another key area of innovation is in chip “packaging”. Instead of printing every component on to the same piece of silicon, to create what’s known as a “system on a chip”, semiconductor companies are now talking up the potential for “chiplets” that allow smaller “building blocks” to be mixed and matched, opening up new flexibility in design and component sourcing.

Intel has described chiplets as “the key to extending Moore’s Law through the next decade and beyond” and last year brought together a consortium of chipmakers and designers, including TSMC, Samsung, Arm and Qualcomm, to establish standards for building these Lego-like processors.

Richard Grisenthwaite, chief architect at chip designer Arm, says that one of the benefits of chiplets as compared to “monolithic” traditional chips is that companies can combine complex and expensive processors with older and cheaper ones. The trick, he cautions, is ensuring that the added expense of packaging multiple components together is more than offset by the savings from using some older, inexpensive parts.

A question of physics

But fresh ideas come with fresh obstacles. A key challenge of many of these innovations, says McKinsey’s Burkacky, is that they tend to make for larger chips, which in turn creates a greater surface area for imperfections.

“A defect is typically an impurity — a particle from the air or the chemical process that drops on the surface, that can kill the functionality of a chip,” he says. “The probability is higher when the chip is bigger.”

That can have fatal implications for semiconductor manufacturers’ production yields, which Burkacky says can drop to 40 to 50 per cent, making a costly process even more economically challenging.
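The link between chip size and yield can be illustrated with the Poisson yield model, a common first-order approximation in which the share of defect-free chips falls exponentially with die area. This is a sketch under stated assumptions, not the method Burkacky or any manufacturer uses. The defect density of 0.1 defects per square centimetre and the die areas are hypothetical values chosen only to show the shape of the relationship.

```python
import math


def poisson_yield(defect_density_per_cm2: float, die_area_cm2: float) -> float:
    """Fraction of dies expected to have zero defects, assuming defects
    land randomly and independently (Poisson model): Y = exp(-D * A)."""
    return math.exp(-defect_density_per_cm2 * die_area_cm2)


if __name__ == "__main__":
    defect_density = 0.1  # hypothetical defects per cm^2, illustration only
    for area in (1.0, 4.0, 8.0):  # hypothetical die areas in cm^2
        share = poisson_yield(defect_density, area)
        print(f"die area {area:.0f} cm^2: expected yield {share:.0%}")
```

With these assumed numbers, the expected yield falls from roughly 90 per cent for the smallest die to about 45 per cent for the largest, even though the defect density itself is unchanged.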

Bigger and more powerful chips can also consume more electricity, creating so much heat inside a data centre that radically different and more energy-intensive cooling systems — such as immersion cooling — are required to achieve top performance.

But at the other end of the scale, smaller chips may also be causing reliability problems, according to some researchers. A team at Google in 2021 published a paper entitled “Cores that don’t count”, after its data centre engineers noticed what they described as “mercurial” behaviour by chips buried in their vast data centres.

“As [chip] fabrication pushes towards smaller feature sizes . . . we have observed ephemeral computational errors that were not detected during manufacturing tests,” the Google engineers, led by Peter Hochschild, wrote. “Worse, these failures are often ‘silent’: the only symptom is an erroneous computation.”

Hochschild concluded that the “fundamental cause” was “ever-smaller feature sizes” that push closer to the limits of silicon scaling, coupled with “ever-increasing complexity in architectural design”.

“So far, [sustaining Moore’s Law] was an industrialisation challenge,” says Burkacky. “I don’t want to downplay that — it was super tricky and hard — but we are reaching a point where now we are talking physics . . . We are getting down to a single atom. So far in physics, that is the end of the story.”

One day, quantum computers may deliver a long-promised leap forward in computing power akin to the silicon advances that began in the 1960s. But even the most bullish quantum advocates concede it will probably take more than a decade to make them useful for practical, everyday computing tasks.

In the meantime, Toon is optimistic that chips like Graphcore’s will be able to unlock new kinds of advances.

“My view of it is . . . we’ll build computers so powerful, and AI so powerful, that we’ll be able to actually understand how molecules work and then we’ll start using those AI computers to build molecular computers,” he says.

“This idea of the singularity [when AI overtakes human intelligence] is bollocks, but the idea that you can use AI to create a next frontier of computing is perfectly practical.”

Additional reporting by Richard Waters and Madhumita Murgia

Graphic illustrations by Ian Bott